We recently needed one of our testers to be able to access the Azure DevOps API to get more information out of DevOps Test Plans. He tried to get the information he needed through the built-in reports, and even by connecting Power BI to DevOps, but still couldn’t get at what he needed. He then discovered he could get the information he was after by using the DevOps API and OData queries.

The problem was that he didn’t have the proper tools to call the API, other than the Chrome browser. Normally our integration developers use Postman to connect, develop and test against APIs, but they do this on Azure Virtual Desktops. Our corporate machines are locked down (no local admin access), so users can’t install software on them. Our tester doesn’t have access to an Azure Virtual Desktop, so we needed to find another option.

Step 1: Find a client

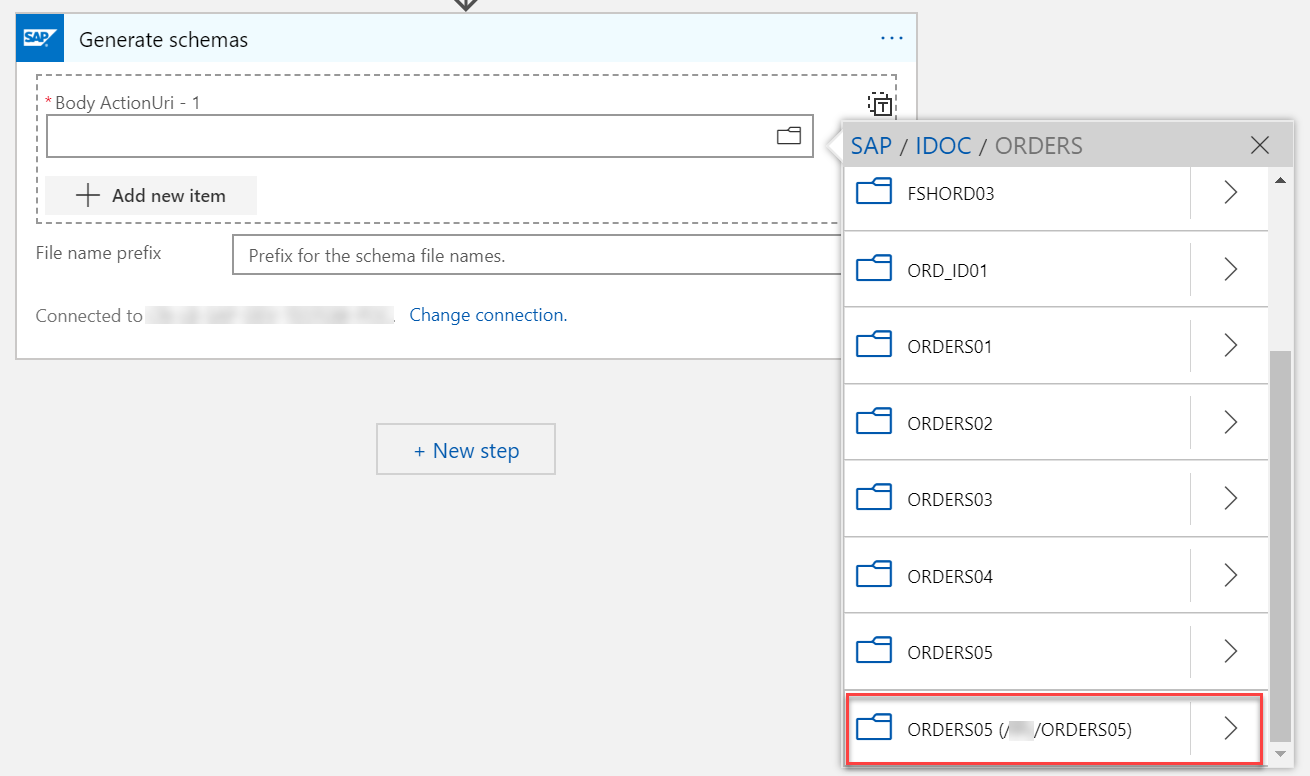

I’m a big fan of Visual Studio Code (VSC) and was delighted to find out we could use it to test our REST calls. The great thing about VSC is that you do not need local admin rights to install it! Out of the box, VSC does not have a REST client, but another great feature of VSC is all the extensions you can install on it.

One of these extensions is REST Client by Huachao Mao. The extension allows you to make HTTP requests and see the responses. It has almost a million downloads and 5 stars, so you know it’s a good extension. It has really good documentation as well.

Step 2: Authentication

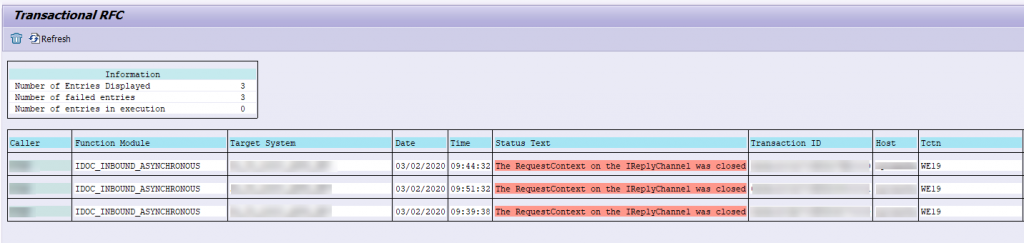

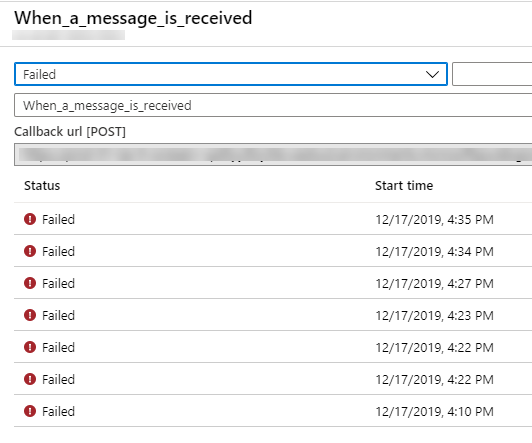

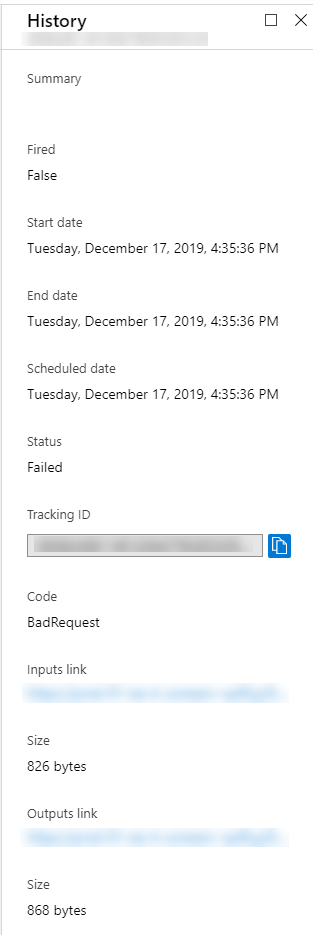

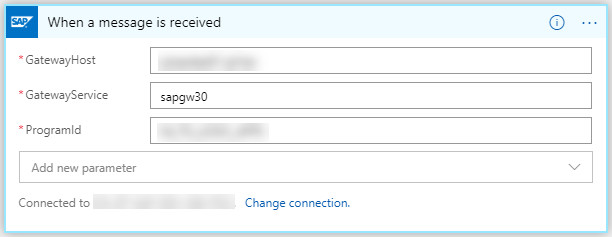

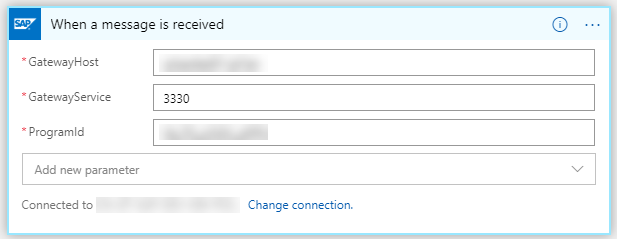

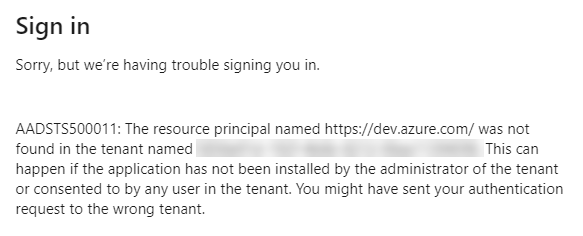

The REST Client has a lot of different authentication options, so I first attempted to get it working with Azure Active Directory. Unfortunately, when trying to connect I got the following error:

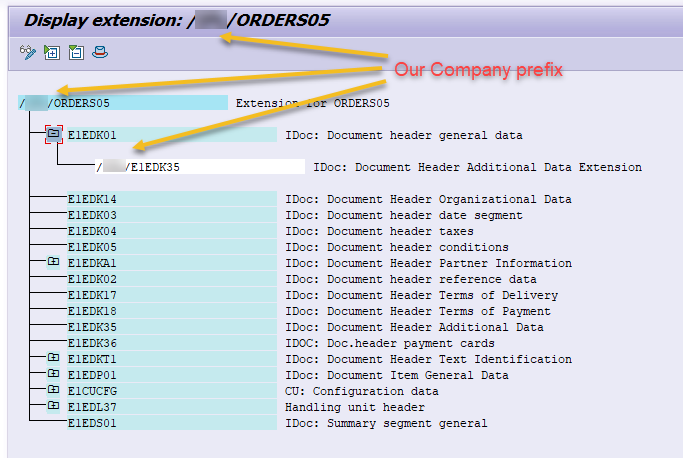

So we needed to have this endpoint added as an application in Azure Active Directory. I don’t have the proper permissions to do this at my company and wasn’t patient enough to get it created through the proper channels. Luckily, a colleague reminded me of Personal Access Tokens (PAT) in Azure DevOps. A Personal Access Token is a token you create yourself and then use to connect to Azure DevOps.

Follow Microsoft’s documentation here to create a PAT:

https://docs.microsoft.com/en-us/azure/devops/organizations/accounts/use-personal-access-tokens-to-authenticate
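If you are curious what the PAT turns into on the wire, here is a minimal Python sketch (not part of our setup, just an illustration). Azure DevOps accepts a PAT over HTTP Basic authentication with an empty username, so the header value is the Base64 encoding of `:` followed by the token. The function name `basic_auth_header` and the token `example-token` are made up for this example.

```python
import base64


def basic_auth_header(pat: str) -> str:
    """Build the Authorization header value for an Azure DevOps PAT."""
    # Basic auth encodes "username:password"; with a PAT the username is empty,
    # which is why the string starts with a colon.
    raw = f":{pat}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


# "example-token" is a placeholder, not a real PAT.
header = basic_auth_header("example-token")
print(header)
```

As far as I can tell, the REST Client extension does this encoding for you when the value after `Basic` is written as `username:password`, so you should not need to do it by hand in VSC.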

Step 3: Send API Request

To create the request, create a new file in VSC and be sure to change the file type (language mode) to “HTTP”.

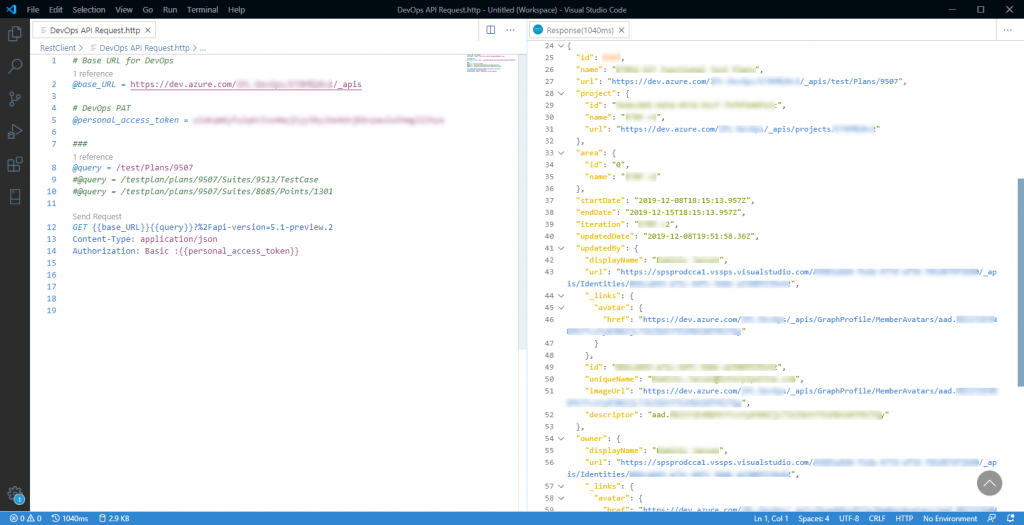

Here is the script we run to get a specific Test Plan via the API:

    # Base URL for DevOps
    @base_URL = https://dev.azure.com/<MyOrganization>/<MyProject>/_apis

    # DevOps PAT
    @personal_access_token = <Personal Access Token>

    ###

    @query = /test/Plans/<Test Plan ID>

    GET {{base_URL}}{{query}}?api-version=5.1-preview.2
    Content-Type: application/json
    Authorization: Basic :{{personal_access_token}}

You will need to update this script with your organization name, your project name and the PAT you created in the previous step. This query returns the Test Plan with the ID you specify. Note that the headers must sit directly under the GET line, with no blank line in between.

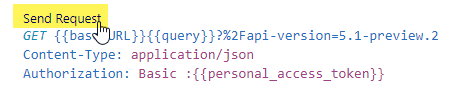

When using the REST Client, you will see a “Send Request” link appear above the “GET” line.

After running the request, the response opens on the right side (or below, depending on your settings) with the results of your query.
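For anyone who wants to see how the pieces of the Step 3 request fit together outside of VSC, here is a rough Python equivalent. It is only a sketch: it assumes the query string parameter is named `api-version`, the function name `build_test_plan_request` is made up, and `MyOrganization`, `MyProject`, plan ID `42` and `example-token` are placeholders. It builds the request but does not send it.

```python
import base64
import urllib.request


def build_test_plan_request(
    organization: str, project: str, plan_id: int, pat: str
) -> urllib.request.Request:
    """Assemble (but do not send) a GET request for a single Test Plan."""
    # Same composition as the .http file: base URL + query + api-version.
    base_url = f"https://dev.azure.com/{organization}/{project}/_apis"
    query = f"/test/Plans/{plan_id}"
    url = f"{base_url}{query}?api-version=5.1-preview.2"

    # The PAT goes in as the Basic auth password with an empty username.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Basic {token}",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


# Placeholder values only; swap in your own organization, project, ID and PAT.
request = build_test_plan_request("MyOrganization", "MyProject", 42, "example-token")
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would actually send it, which is the step “Send Request” performs for you in VSC.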

Step 4: Send OData Request

Just like calling the API in Step 3, here is the script to make an OData query.

    # Base URL for DevOps
    @apibase_URL = https://analytics.dev.azure.com/<MyOrganization>/<MyProject>/_odata/v3.0-preview/WorkItems

    # DevOps PAT
    @personal_access_token = <Personal Access Token>

    ###

    #@query = ?<OData Query>
    @query = ?$select=WorkItemId,Title,WorkItemType,State&$filter=WorkItemId eq 8594&$expand=Links($select=SourceWorkItemId,TargetWorkItemId,LinkTypeName;$filter=LinkTypeName eq 'Related';$expand=TargetWorkItem($select=WorkItemId,Title,State))

    GET {{apibase_URL}}{{query}}
    Content-Type: application/json
    Authorization: Basic :{{personal_access_token}}
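The OData query above is easier to read when it is split into its `$select`, `$filter` and `$expand` parts. Here is a small Python sketch that builds the same URL from those parts and percent-encodes the spaces. The function name `build_odata_url` and the `MyOrganization` / `MyProject` values are placeholders I made up, and which characters are left unencoded is my own choice for readability, not something the Analytics service requires.

```python
from urllib.parse import quote, urlencode

# Same Analytics endpoint as the .http file, with placeholders for org and project.
ANALYTICS_BASE = (
    "https://analytics.dev.azure.com/{organization}/{project}"
    "/_odata/v3.0-preview/WorkItems"
)


def build_odata_url(organization: str, project: str, options: dict) -> str:
    """Join OData query options into a single URL, encoding spaces as %20."""
    base = ANALYTICS_BASE.format(organization=organization, project=project)
    # Leave the OData punctuation as-is so the URL stays readable;
    # everything else that is not URL-safe (such as spaces) is percent-encoded.
    query = urlencode(options, quote_via=quote, safe="$,();'=")
    return f"{base}?{query}"


options = {
    "$select": "WorkItemId,Title,WorkItemType,State",
    "$filter": "WorkItemId eq 8594",
    "$expand": (
        "Links($select=SourceWorkItemId,TargetWorkItemId,LinkTypeName;"
        "$filter=LinkTypeName eq 'Related';"
        "$expand=TargetWorkItem($select=WorkItemId,Title,State))"
    ),
}

url = build_odata_url("MyOrganization", "MyProject", options)
print(url)
```

Keeping the options in a dictionary makes it simple to change the filter or add a field without hunting through one long line.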

Conclusion

Hopefully this gives you a good sample of how you can use Visual Studio Code as a REST client to develop and test against APIs. In particular, it shows how to make API calls and OData queries against Azure DevOps Test Plans.

Let me know if this was helpful.